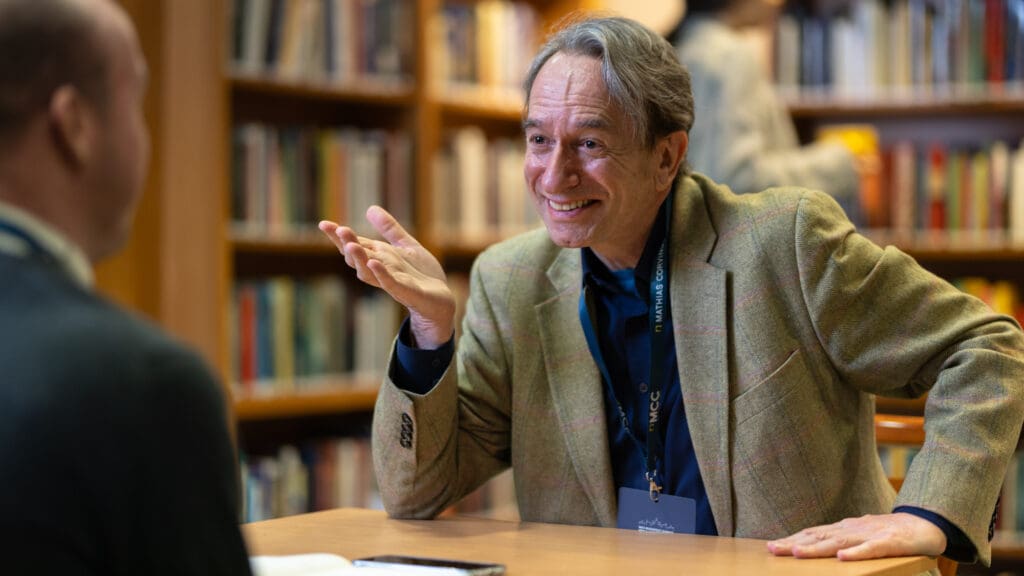

Martin Robinson is an author, consultant, and speaker with a wealth of experience in academia, curriculum development, and educational consulting. A dedicated professional committed to enhancing educational practices and preparing young minds for the future, he is professor at the Academica University of Applied Sciences, Amsterdam, Netherlands and fellow at the Royal Society of Arts. During the MCC Summit on Education, Hungarian Conservative had a chance to sit down with him to talk about AI usage in the classroom and its incorporation into education.

How do you think AI is shaping or is going to shape the future of education?

A relevant quote comes to mind from a song called ‘The Ballad of Accounting’, a folk song by Ewan MacColl and Peggy Seeger, who are very much to the left, but they asked this very important question. And that is: ‘were you the maker or the tool?’ And this came to me as being quite a good way of talking about technology as a whole, let alone AI. In other words, you know, that moment when you want to grab your phone, and you want to scroll through that app, or something like that, who’s in charge of that relationship? Is it the phone, or you? And to me AI is one of these things. It could be a tool that you use, but it’s in danger of being a tool that uses you. And getting that relationship right is vital. And that’s going to be the actual deciding point, I guess, in the future.

What do you think of the current practical benefits of AI in education?

Personally, I think: ignore it as much as possible. But, I mean, again, there’s a whole raft of things that management in education, particularly, is bringing it in for, such as reducing workload. You know, it is the same thing they said about email. And what happened with email? Did it reduce workload? No. It made work easier, and more accessible to you. So now, instead of working when you’re at work, you work all the time.

So I think this is where the ‘maker or the tool’ thing becomes important. Because if a management system pushes it on teachers, they’ll be worked more. This is the lesson we get: it will be more and more work, even though it should be reducing your work. And there are other threats as well. If it doesn’t increase your workload, it’ll replace you. So you’re caught between those two.

It obviously would put more workload on teachers and educators at the introduction, because they would have to adapt and evolve with it. In your opinion, would that be an easy transition for teachers?

It’s being done. Teachers I know are teaching by using AI to prepare the lesson. So it tells you what to do in the lesson as a teacher, and it constructs questions to ask. It also sets the homework and writes an essay question. The essays come back from the pupils. It can mark the essays and put them into rank order, you know, which is best, which is worst, which is in between, and give them a grade. The big flaw in the whole thing is that those pupils have gone home and used AI to write the essay.

So what we’ve done is take the human out of the system.

I love it, you know, a great many people are using AI. Everyone’s doing it. But no one’s admitting it, everyone’s pretending they’re not. So it’s brilliant. You know, everyone’s lying about it. But we’re running the pretence that we’re still doing useful things by being at least present in the classroom. I also asked this question in the panel discussion. In the scenario I outlined, who was cheating, was it the teacher or the pupil? Or both?

There is another debate around AI, which is the ethical one. I would just like to know your opinion. How ethical is it? Because as you described it, it is cheating in certain areas.

Is any of it cheating? I mean, is using a book cheating? If you copy it, it is cheating. If you sort of put it into your own words, it’s okay. I think these are grey areas. I think the ethical thing about any of this is it has to be part of a continual ethical conversation. What you can’t do is just put it in the hands of one group of people who then drive it, whether it be Silicon Valley, whether it be the Chinese government, whether it be the EU, whoever it is. You don’t want them to be in charge of it, telling other people what to do, since there are ethical concerns in it. A lot of the capitalist argument is to let the market do that. I don’t know if it can. I don’t know if the market is that subtle. I think it responds to exploitation too much. So you’ve got to be careful with the possibility of it.

I think in conservatism there’s this big schism between the moral conservative and the market conservative.

Roger Scruton, the philosopher, talked about this a lot. Because he hated popular culture, but he had to admit it was part of capitalism, where the money is being made, and where the money is being made, your ethical systems can collapse. Everything can fall apart, because this is the way the market is going. And it goes in that direction. So the ethical concern, where does that come from? Who is in charge of that? Should it be the church, the state, the people?

There are currently proposals in the EU about regulating AI usage. Do you think it should be regulated?

I’m now caught in that schism, because you’ve also got censorship. And the difference between regulation and censorship is often also muddied. But what hope we have lies in making it a continual conversation, in which people continue to look at whether that is the right thing to do, or whether it was enough. I worry about this. As an example, the phone, which isn’t AI, but now has AI on it: the worry is that we let children have these things from a young age, with no censorship at all. And what they can access so easily is quite frightening.

And even if a parent stops them, the kid next door has still got a phone, and their parents don’t know what they send to you on it. So you need to regulate. But you can’t just do it in one nation, because you’re playing games with people all around the world. So even if it’s just gaming, let alone once you add social media and things like that, you have to think in terms of big blocs of powerful AI nations, if you like, which, I suppose, is why the EU is a big enough entity to take it on. It’s difficult. It’s so difficult. But yeah, we’re talking about censorship. And then, do we have censorship at that level? But then there’s the dark web, and all those other things that are accessible as well, which are illegal. But there it is. Illegal, but everyone can access it if they really want to.

What about ChatGPT? Do you think it is going to diminish or even ruin the capabilities of students to write or to even comprehend large texts?

(Reporter’s note: At this point Mr Robinson pulled out his phone and asked its AI assistant to summarize his book titled Athena versus the machine. The quotation in italics is what the AI answered.)

Right. Could you summarize Martin Robinson, Athena versus the machine please?

‘Absolutely. In his book Curriculum: Athena versus the machine, Martin Robinson makes a distinction between two types of education, the Athena curriculum, which is rooted in wisdom and a pursuit of knowledge for its own sake, and the machine curriculum, which views education as a means to an end, often focusing on job skills and economic productivity. Robinson advocates for a return to an Athena style curriculum, arguing that it leads to a more well rounded, thoughtful and engaged citizenry.’

It wasn’t quite right, but it’s sort of there. But it didn’t explain the depth of the argument on creating in the book, so it doesn’t understand it. Could I get away with that? In an Amazon review, I probably could. But now, as a teacher, if I start discussing it with that student, who wrote that, who took that and put it in an essay, I would find out how much they had read and understood the book. So I think the importance then is also not just the idea of them cheating, but the importance of speaking in the classroom and examining ideas, and having a spoken place where we come together, and discuss and debate and think.

Are there in your opinion exciting possibilities with AI enhancement in education? Or is it going to remain the same as it is?

I think, again, it’s the thing about making it very general about all education. I don’t know, but I think in certain topics like the sciences, particularly in mathematical subjects, medicine, its ability to handle data and to spot things that maybe a human wouldn’t is fascinating.

Because by reading data at that level, it can be used as a tool that you could teach in medical schools, and perhaps a bit lower down in the sciences: how to use this tool in certain ways. So an extension is there, and having that as a way forward is possible, but I don’t think necessarily in the arts.

But then the other day I was listening to this musician who was using AI to create music. The thing is, there he is: he’s a musician in charge of the machine. So this thing about the maker or the tool: he’s in charge of the tool, he’s using it to make music. I think that’s okay. That is different than AI making music, or it making a picture. You know, when it says do a Picasso version of this thing here, it does the chair. That’s not thinking. That’s just dreadful.
